How OpenAI Scaled Kubernetes to 7,500 Nodes by Removing One Plugin

The one change that improved OpenAI's network.

One of the biggest names in AI tripled the size of its infrastructure, going from 2,500 nodes to 7,500 in just a few years.

To put that into perspective, the average enterprise company will do fine with around 50-100 nodes.

This enormous increase in computing power worked because of lots of changes.

But the most important one was removing a plugin called Flannel.

Why? Let’s dive into it.

Estimated reading time: 3 minutes 59 seconds

What is Flannel?

Imagine we have a Kubernetes cluster that contains two nodes (virtual computers), one for a Node.js web server, another for a Postgres database.

Each of these nodes runs two pods (groups of one or more containers): one for the web server or database, and one for a log collector like Vector.

Sidenote: Node vs Pod

Pods usually contain a single application (multiple if needed), and a node provides the resources the pod needs to run: CPU, memory, and so on.

Nodes get assigned an IP address by the cloud provider. Pods also get assigned IPs by default using kubenet, but those IPs can only be used to communicate within the same node.

If you want pods in different nodes to communicate, HTTP is a good approach.

But before you can even do that, you'll need to give each pod a unique IP address so they know where to send data.

A bit like giving someone a unique postal address so you're able to send letters to them.

Out of the box, pod-to-pod communication between nodes is not straightforward in Kubernetes, and this is where Flannel comes in.

Flannel exists to make pod-to-pod communication really easy. It configures the network for each pod, which includes assigning each pod an IP address.

In the world of Kubernetes, Flannel is called a CNI plugin (Container Network Interface). It's not the only one; there are others like Calico and Cilium.

But Flannel is the easiest to set up.

Why Was it Removed?

Flannel is a great solution, but it wasn't designed for thousands of nodes.

OpenAI was already seeing slow speeds with Flannel for 2,500 nodes, so 7,500 nodes would have definitely been a struggle.

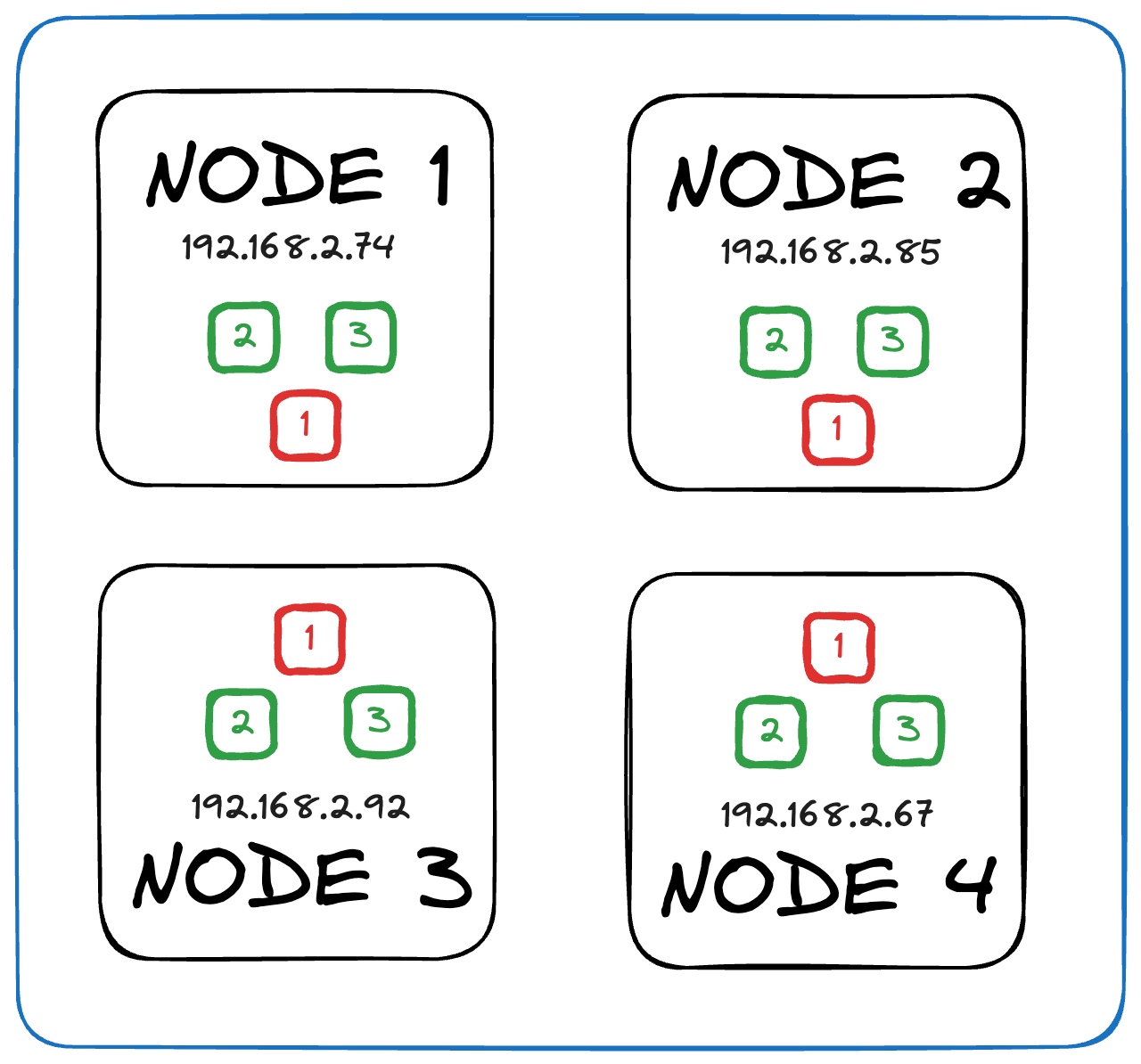

To fully understand what made it slow, let's go back to our previous example and double the number of nodes.

For communication between nodes, Flannel performs several tasks:

Assigns each node its own range of IP addresses, carved out of one cluster-wide range, for the node to give to its pods (subnet allocation).

Creates route tables to keep track of which node owns which range of pod IPs.

With this information, if data needs to be sent to a specific pod, Flannel will know the exact IP address of the node the pod belongs to (traffic routing).

It also wraps traveling data (packets) inside an outer packet addressed to the destination node, so the network between nodes can deliver it without knowing anything about pod IPs (packet encapsulation).
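To make subnet allocation and traffic routing a bit more concrete, here is a minimal Python sketch. It is not Flannel's actual code; the pod network `10.244.0.0/16`, the node IPs, and the function names are all assumptions made up for illustration.

```python
import ipaddress

# Assumed values for illustration only; not taken from OpenAI's setup.
CLUSTER_POD_NETWORK = ipaddress.ip_network("10.244.0.0/16")
NODE_IPS = ["192.168.0.10", "192.168.0.11", "192.168.0.12", "192.168.0.13"]


def allocate_subnets(pod_network, node_ips, new_prefix=24):
    """Subnet allocation: give each node its own slice of the pod network."""
    subnets = pod_network.subnets(new_prefix=new_prefix)
    return {node_ip: next(subnets) for node_ip in node_ips}


def build_route_table(allocations):
    """Route table: map each pod subnet to the node that owns it."""
    return {subnet: node_ip for node_ip, subnet in allocations.items()}


def node_for_pod(route_table, pod_ip):
    """Traffic routing: find which node a pod IP lives on."""
    address = ipaddress.ip_address(pod_ip)
    for subnet, node_ip in route_table.items():
        if address in subnet:
            return node_ip
    raise LookupError(f"no route for {pod_ip}")


allocations = allocate_subnets(CLUSTER_POD_NETWORK, NODE_IPS)
routes = build_route_table(allocations)
print(node_for_pod(routes, "10.244.2.7"))  # 192.168.0.12
```

Notice that the route table has one entry per node. In this sketch, four nodes means four routes; the same approach at 7,500 nodes would mean 7,500 entries to create and keep up to date.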

The aim of Flannel is to speed up communication, but with all these processes on top of thousands of nodes, it actually slows down the network.

As shown in the image below, with 150,000 requests per second (RPS), Flannel’s VXLAN tends to have the worst performance.

Of course, the team at OpenAI needed to do something about this.

Sidenote: VXLAN

VXLAN (Virtual eXtensible Local Area Network) is used to create a virtual network that allows machines to communicate.

Long before the internet, if you had two physical computers, you would have to connect a cable between them so they could communicate.

A VXLAN simulates this using the power of the internet or a larger network.

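If two computers are connected to the internet and have VXLAN software installed, they each get a unique IP address on the virtual network, and packets are encapsulated (wrapped inside another packet) so they can cross the real network and reach the right machine. Encapsulation on its own is not encryption.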
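Here is a rough Python sketch of what encapsulation adds to each packet. The 8-byte header layout follows the VXLAN specification (RFC 7348), but the function names, the payload, and the VNI value are assumptions for illustration, and the outer Ethernet, IP, and UDP headers are only counted, not built.

```python
import struct

VXLAN_FLAGS = 0x08    # "VNI is valid" flag defined in RFC 7348
OUTER_ETHERNET = 14   # bytes, untagged outer Ethernet header
OUTER_IPV4 = 20       # bytes, outer IPv4 header without options
OUTER_UDP = 8         # bytes, outer UDP header
VXLAN_HEADER = 8      # bytes, the VXLAN header itself


def vxlan_header(vni):
    """Build the 8-byte header: flags, 24 reserved bits, 24-bit VNI, 8 reserved bits."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", VXLAN_FLAGS << 24, vni << 8)


def encapsulate(inner_frame, vni):
    """Prefix the inner frame with a VXLAN header (outer headers omitted)."""
    return vxlan_header(vni) + inner_frame


def overhead_bytes():
    """Extra bytes per packet on an IPv4 network without VLAN tags."""
    return OUTER_ETHERNET + OUTER_IPV4 + OUTER_UDP + VXLAN_HEADER


inner = b"pod-to-pod payload"      # hypothetical inner frame
packet = encapsulate(inner, vni=1)  # VNI value chosen for illustration
print(len(packet) - len(inner))     # 8
print(overhead_bytes())             # 50
```

So every packet sent between nodes carries about 50 extra bytes and has to be wrapped on one side and unwrapped on the other.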

What Was Used Instead?

If you keep up with OpenAI news, or just AI news in general, you'll know that Microsoft has put a lot of money behind them.

You may also know that Microsoft owns Azure, which is a huge cloud provider.

So it only makes sense that OpenAI hosts all of its infrastructure on Azure. I mean, it would be weird if they hosted it on Google Cloud or AWS, right?

Since OpenAI was using the Azure Kubernetes Service (AKS) for their infrastructure, using Azure's CNI was the obvious choice, as both services work better together.

Azure's CNI isn't just a fork of Flannel with Microsoft branding; it's different in many ways:

Both nodes and pods are given IP addresses from the same range (subnet allocation). For example, if a node has IP 10.0.0.1, the pod gets 10.0.0.2, allowing direct pod-to-pod communication without going through a node.

It doesn't use a virtual network (VXLAN); it uses direct routing. This means no virtual routes need to be created, and there's no encapsulation of packets.

It is not a general-purpose CNI; it is specifically designed for Azure Kubernetes Service, meaning it has native integration and better performance with an AKS cluster.
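The flat addressing idea can be sketched in a few lines of Python. This is only an illustration of nodes and pods sharing one range, not how Azure CNI is implemented; the subnet `10.0.0.0/24`, the node names, and the function name are assumptions.

```python
import ipaddress

# Hypothetical virtual network subnet shared by nodes AND pods.
VNET_SUBNET = ipaddress.ip_network("10.0.0.0/24")


def assign_flat_ips(subnet, nodes, pods_per_node):
    """Hand out addresses from one shared range to nodes and their pods."""
    hosts = iter(subnet.hosts())
    assignments = {}
    for node in nodes:
        node_ip = next(hosts)
        pod_ips = [next(hosts) for _ in range(pods_per_node)]
        assignments[node] = {"node_ip": node_ip, "pod_ips": pod_ips}
    return assignments


assignments = assign_flat_ips(VNET_SUBNET, ["node-a", "node-b"], pods_per_node=2)
for node, ips in assignments.items():
    print(node, ips["node_ip"], [str(ip) for ip in ips["pod_ips"]])
# node-a 10.0.0.1 ['10.0.0.2', '10.0.0.3']
# node-b 10.0.0.4 ['10.0.0.5', '10.0.0.6']
```

Because every pod address is a real address on the underlying network, there is no per-node pod subnet to look up and nothing to wrap. The trade-off in this sketch is that pods use up addresses from the same range as the nodes, so the subnet has to be big enough for both.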

All of these differences made Azure CNI much faster than Flannel, but it isn't a drop-in replacement for Flannel.

The team had to use a different solution to keep track of packets and rely on a different routing engine.

However, the benefits outweigh the drawbacks.

OpenAI even reported getting host-level network throughput on its pods, meaning pod-to-pod communication between nodes was as fast as the nodes' own network allows.

And That’s a Wrap

It's amazing to think that this whole article was just one paragraph of the original post.

There's a lot of good information in there, and I recommend you check it out.

But if you enjoyed this simplified format, go ahead and subscribe to get more like this.
