How Dropbox Saved Millions of Dollars by Building a Load Balancer

Dropbox saved resources by creating a superior version of a tool everyone uses

Dropbox is a cloud-based storage service that is ridiculously easy to use.

Download the app and drag your files into the newly created folder. That's it; your files are in the cloud and can be accessed from anywhere.

It sounds like a simple idea, but back in 2007, when it was released, there wasn't anything like it.

Today, Dropbox has around 700 million users and stores over 550 billion files.

All these files need to be organized, backed up, and accessible from anywhere. Dropbox uses virtual servers for this. But they often got overloaded and sometimes crashed.

So, the team at Dropbox built a solution to manage server loads.

Here's how they did it.

Estimated reading time: 5 minutes 15 seconds

Why Dropbox Servers Were Overloaded

Before Dropbox grew in scale, they used a traditional system to balance load.

This likely used a round-robin algorithm with fixed weights.

So, a user or client would upload a file. The load balancer would forward the upload request to a server. Then, that server would upload the file and store it correctly.

Sidenote: Weighted Round Robin

A round-robin is a simple load-balancing algorithm. It works by cycling requests to different servers so they get an equal share of the load.

If there are three servers, A, B, and C, and three requests come in, A gets the first, B gets the second, and C gets the third.
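
As a minimal sketch of that cycling behaviour (the function name and request labels here are made up for illustration), round robin can be written in a few lines of Python:

```python
from itertools import cycle


def round_robin(servers, requests):
    """Assign each request to the next server in a repeating cycle."""
    rotation = cycle(servers)
    return [(request, next(rotation)) for request in requests]


assignments = round_robin(["A", "B", "C"], ["req1", "req2", "req3"])
print(assignments)  # [('req1', 'A'), ('req2', 'B'), ('req3', 'C')]
```

A fourth request would simply wrap around and go back to A.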

Weighted round robin is a level up from round robin. Each server is given a weight based on its processing power and capacity.

Static weights are assigned manually by a network admin. Dynamic weights are adjusted in real time by a load balancer.

The higher the weight, the more load the server gets.

So if A has a weight of 3, B has 2, and C has 1, and 12 requests come in, A would get 6, B would get 4, and C would get 2.
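
The arithmetic above can be checked with a small sketch. This is the simplest possible version, assuming integer weights and a hypothetical `weighted_round_robin` helper: each server appears in the rotation as many times as its weight. Production load balancers usually interleave servers more smoothly, but the totals come out the same.

```python
from collections import Counter
from itertools import cycle


def weighted_round_robin(weights, num_requests):
    """Count how many requests each server gets under static integer weights."""
    # Build a schedule in which each server appears `weight` times: A A A B B C
    schedule = [server for server, weight in weights.items() for _ in range(weight)]
    rotation = cycle(schedule)
    return Counter(next(rotation) for _ in range(num_requests))


counts = weighted_round_robin({"A": 3, "B": 2, "C": 1}, 12)
print(dict(counts))  # {'A': 6, 'B': 4, 'C': 2}
```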

But there was an issue with their traditional load balancing approach.

Dropbox had many virtual servers with vastly different hardware. This made it difficult to distribute the load evenly between them with static weights.

This difference in hardware could have been caused by Dropbox using more powerful servers as it grew.

They may have started with an average server. As it grew, the team acquired more powerful servers. As it grew more, they acquired even more powerful ones.

At the time, there was no off-the-shelf load-balancing solution that could help, especially one that used dynamic weighted round-robin with gRPC support.

So, they built their own, which they called Robinhood.

Sidenote: gRPC

gRPC is an open-source framework, originally developed by Google, that lets different programs talk to each other. It's based on Remote Procedure Call (RPC), which allows a client to run a function on the server simply by calling it.

This is different from REST, which requires communication via a URL. REST also focuses on the resource being accessed instead of the action that needs to be taken.

But gRPC also differs from REST and regular RPC in other ways.

The biggest one is the use of protobufs (Protocol Buffers). This data format, developed by Google, is used to store and send data.

It works by encoding structured data into a binary format for fast transmission. The recipient then decodes it back to structured data. This format is also much smaller than something like JSON.
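
To get a feel for why a binary format is smaller, here is a rough sketch using only Python's standard library. It does not use the real protobuf wire format (which adds field tags and variable-length integers); the `struct` layout and the example record are assumptions chosen purely to show the idea of both sides agreeing on a schema so that only the values are sent.

```python
import json
import struct

record = {"user_id": 12345, "file_size": 1048576, "is_shared": True}

# Text encoding: field names and digits are all sent as characters.
as_json = json.dumps(record).encode("utf-8")

# Binary encoding: sender and receiver agree on the layout in advance
# (unsigned 32-bit int, unsigned 64-bit int, bool), so only values are sent.
LAYOUT = "<IQ?"
as_binary = struct.pack(
    LAYOUT, record["user_id"], record["file_size"], record["is_shared"]
)

# The recipient decodes the bytes back into structured data.
user_id, file_size, is_shared = struct.unpack(LAYOUT, as_binary)
decoded = {"user_id": user_id, "file_size": file_size, "is_shared": is_shared}

print(len(as_json), len(as_binary))
```

The binary version is a fraction of the size of the JSON one, but it is unreadable without the agreed layout, which is the trade-off protobufs make too.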

Protobufs are what make gRPC fast, but they also make it more difficult to set up, since both the client and the server need to support them.

gRPC isn't supported natively by browsers. So, it's commonly used for internal server communication.

The Custom Load Balancer

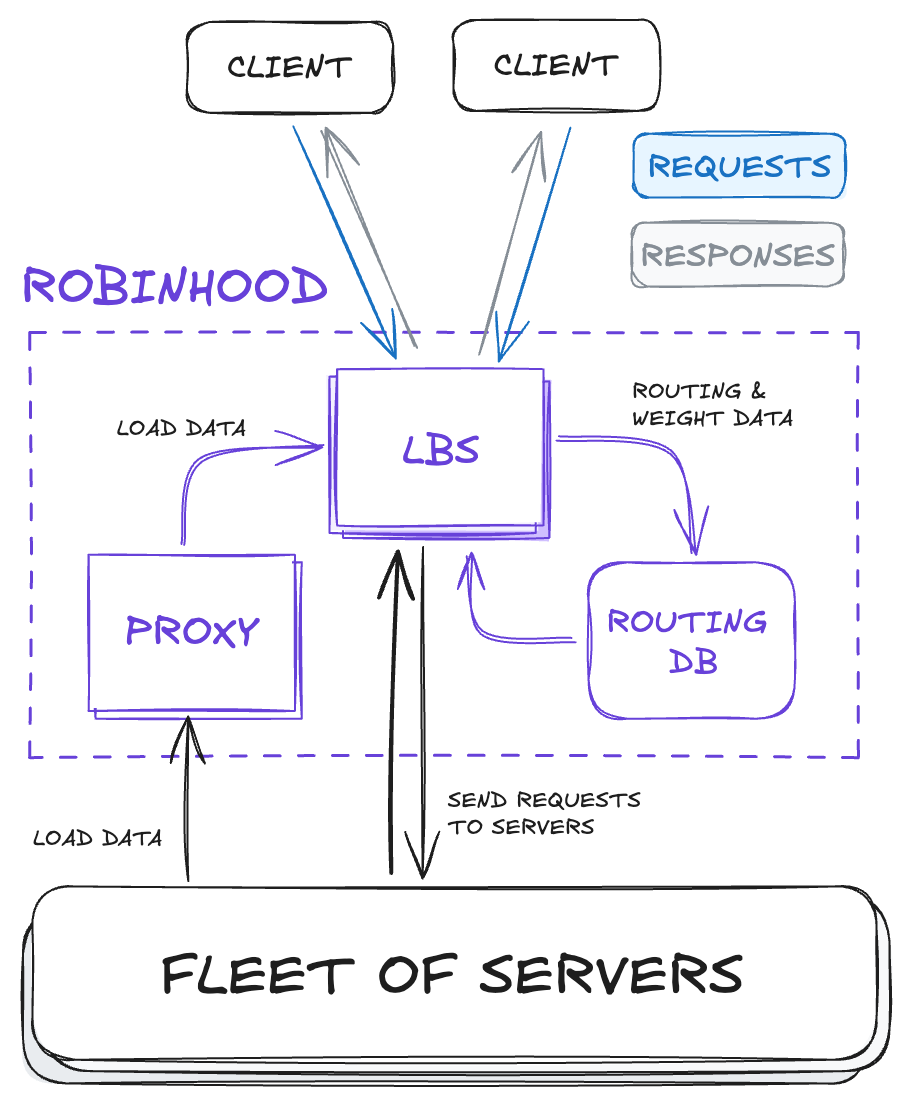

The main component of Robinhood is the load balancing service, or LBS. This manages how requests are distributed to different servers.

It does this by continuously collecting data from all the servers. It uses this data to figure out the average optimal resource usage for all the servers.

Each server is given a PID controller, a piece of code to help with resource regulation. This has an upper and lower server resource limit close to the average.

Say the average CPU limit is 70%. The upper limit could be 75%, and the lower limit could be 65%. If a server hits 75%, it is given fewer requests to deal with, and if it goes below 65%, it is given more.

This is how the LBS gives weights to each server. Because the LBS uses dynamic weights, a server that previously had a weight of 5 could drop to 1 if its resource usage goes above the average.
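
Here is a rough sketch of how a PID controller could turn CPU readings into weight changes. It is a textbook PID loop, not Dropbox's implementation: the class, the `adjust_weight` helper, the gain values, and the weight bounds are all assumptions for illustration. The `tolerance` parameter plays the role of the 65% to 75% band from the example above.

```python
class PIDController:
    """Textbook PID controller; the gains used below are illustrative only."""

    def __init__(self, kp, ki, kd, setpoint):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.setpoint = setpoint  # target utilization, e.g. the fleet average
        self.integral = 0.0
        self.previous_error = None

    def update(self, measured, dt=1.0):
        error = self.setpoint - measured  # positive when the server is under target
        self.integral += error * dt
        if self.previous_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.previous_error) / dt
        self.previous_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def adjust_weight(weight, controller, cpu, tolerance=0.05,
                  min_weight=1.0, max_weight=100.0):
    """Leave the weight alone inside the band, otherwise nudge it toward target."""
    if abs(cpu - controller.setpoint) < tolerance:
        return weight
    new_weight = weight + controller.update(cpu)
    return max(min_weight, min(max_weight, new_weight))


target = 0.70
hot = adjust_weight(50.0, PIDController(20.0, 1.0, 0.0, target), cpu=0.80)
cold = adjust_weight(50.0, PIDController(20.0, 1.0, 0.0, target), cpu=0.60)
steady = adjust_weight(50.0, PIDController(20.0, 1.0, 0.0, target), cpu=0.72)
print(hot, cold, steady)
```

The overloaded server ends up with a lower weight, the underused one with a higher weight, and the one inside the band is left unchanged.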

In addition to the LBS, Robinhood had two other components: the proxy and the routing database.

The proxy sends server load data to the LBS via gRPC.

Why doesn't the LBS collect this itself? Well, the LBS is already doing a lot.

Imagine there could be thousands of servers. It would need to scale up just to collect metrics from all of them.

So, the proxy has the sole responsibility of collecting server data to reduce the load on the LBS.

The routing database stores server information, such as the weights generated by the LBS, IP addresses, and hostnames.

Although the LBS stores some data in memory for quick access, an LBS itself can come in and out of existence; sometimes, it crashes and needs to restart.

The routing database keeps data for a long time, so new or existing LBS instances can access it.
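
The relationship between the in-memory data and the routing database can be sketched like this. Everything here is hypothetical: the class names are invented, and a plain dictionary stands in for the real ZooKeeper or etcd store. The point is only that a restarted LBS can rebuild its state from the durable copy.

```python
class RoutingDatabase:
    """In-memory stand-in for a durable store such as ZooKeeper or etcd."""

    def __init__(self):
        self._records = {}

    def put(self, hostname, record):
        self._records[hostname] = dict(record)

    def snapshot(self):
        return {host: dict(rec) for host, rec in self._records.items()}


class LoadBalancingService:
    def __init__(self, routing_db):
        self.routing_db = routing_db
        # On startup, rebuild the fast in-memory cache from the durable store.
        self.cache = routing_db.snapshot()

    def set_weight(self, hostname, ip, weight):
        record = {"ip": ip, "weight": weight}
        self.cache[hostname] = record
        self.routing_db.put(hostname, record)  # persist so a restart can recover it


db = RoutingDatabase()
lbs = LoadBalancingService(db)
lbs.set_weight("server-a", "10.0.0.1", 5)

del lbs  # simulate the LBS instance crashing
restarted = LoadBalancingService(db)  # a new instance picks up the saved state
print(restarted.cache["server-a"]["weight"])  # 5
```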

The routing database can be based on either ZooKeeper or etcd. The choice between them may come down to supporting legacy systems.

Sidenote: ZooKeeper vs etcd

Both ZooKeeper and etcd are distributed coordination services.

They are designed to be the central place where config and state data is stored in a distributed system.

They also make sure that each node in the system has the most up-to-date version of this data.

These services contain multiple servers and elect a single server, called a leader, that takes all the writes.

This server copies the data to other servers, which then distribute the data to the relevant clients. In this case, a client could be an LBS instance.

So, if a new LBS instance joins the cluster, it knows the exact state of all the servers and the average that needs to be achieved.

There are a few differences between ZooKeeper and etcd.

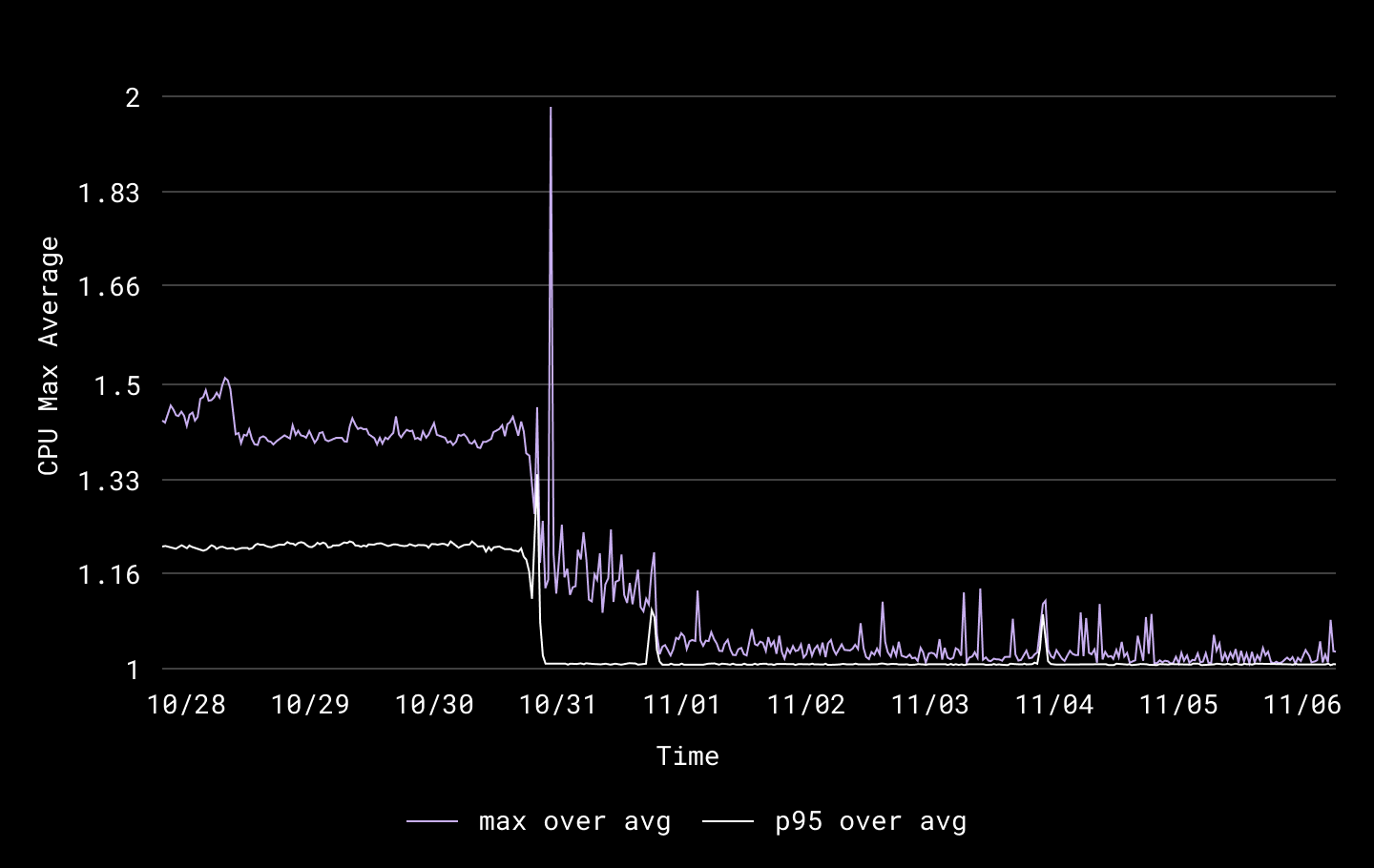

After Dropbox deployed Robinhood to all their data centers, here is the difference it made.

The X axis shows date in MM/DD and the Y axis shows the ratio of CPU usage compared to the average. So, a value of 1.5 means CPU usage was 1.5 times higher than the average.
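
As a sketch of how a ratio like this could be computed from raw readings (the function name and the sample numbers are assumptions, not Dropbox's data), take each server's CPU utilization and divide the maximum and the 95th percentile by the average:

```python
import statistics


def cpu_balance_ratios(cpu_utilizations):
    """Return (max/avg, p95/avg) for a list of per-server CPU utilizations."""
    average = statistics.mean(cpu_utilizations)
    # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
    p95 = statistics.quantiles(cpu_utilizations, n=100, method="inclusive")[94]
    return max(cpu_utilizations) / average, p95 / average


balanced = cpu_balance_ratios([0.5] * 10)
unbalanced = cpu_balance_ratios([0.4, 0.5, 0.6, 0.9])
print(balanced, unbalanced)
```

A perfectly balanced fleet gives ratios of 1.0, and the further the ratios climb above 1.0, the more unevenly the load is spread.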

You can see that at the start, 95% of CPUs were operating at around 1.17 times the average.

It takes a few days for Robinhood to regulate everything, but after 11/01, usage stabilizes and most CPUs operate close to the average.

This shows a large reduction in the spread of CPU usage across servers, which indicates a better-balanced load.

In fact, after using Robinhood in production for a few years, the team at Dropbox has been able to reduce their server size by 25%. This massively reduced their costs.

Dropbox doesn't state that it saved millions annually from this change. But based on the cost and resource savings they mentioned from implementing Robinhood, as well as their size, it can be inferred that they saved a lot of money, most likely millions.

Wrapping Things Up

It's amazing how much goes on behind the scenes when someone uploads a file to Dropbox. I will never look at the app in the same way again.

I hope you enjoyed reading this as much as I enjoyed writing it. If you want more details, you can check out the original article.
